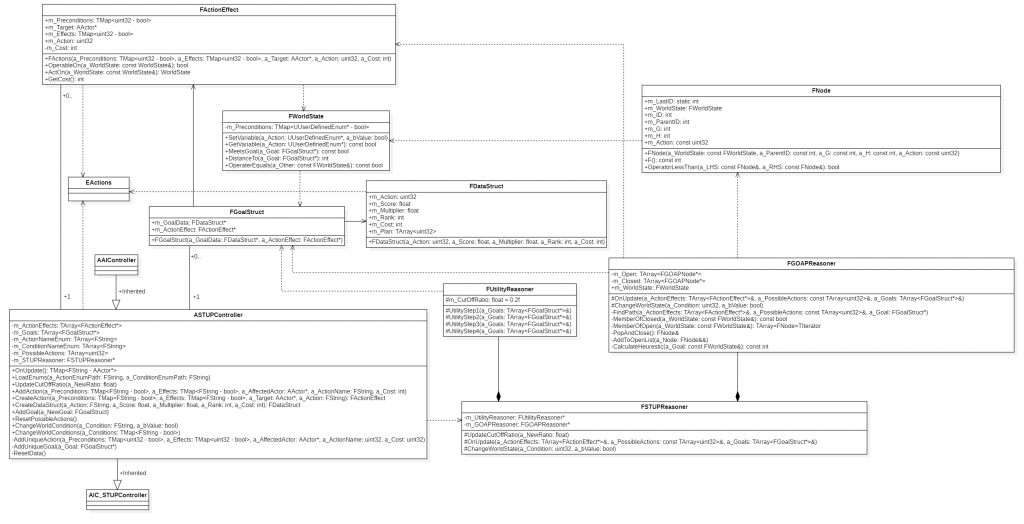

STUP AI in Unreal Engine 4

This post will go into detail about the Short Term Utility Planning which was mentioned in the Bewildered AI post.

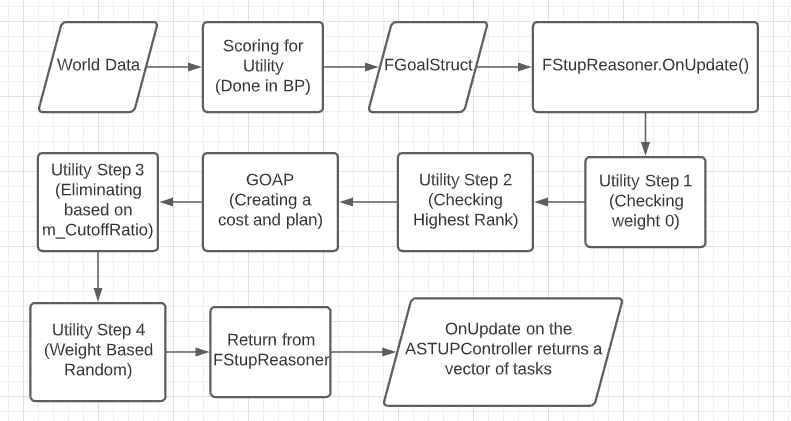

I will mainly be following the same order as that post, meaning that the below flowchart is important, I will also add the UML that a coworker and I made together for this AI.

This form of AI adds the principles of the GOAP and Utility-based AI together, to make plans for each possible action on each possible item. This means that we might get the getFood plan 6 times for 6 different sources, each scored differently.

This AI was also made in code meant for use in Unreal Engine 4, meaning that if there are some variables that could have been done differently it will most likely mean that we made this choice, because of the constraints put on the AI by Unreal Engine.

We chose to use an A* based pathfinding for GOAP, with Boolean variables. Since we already did some evaluating of things that were stored in any other format using the Utility part of STUP.

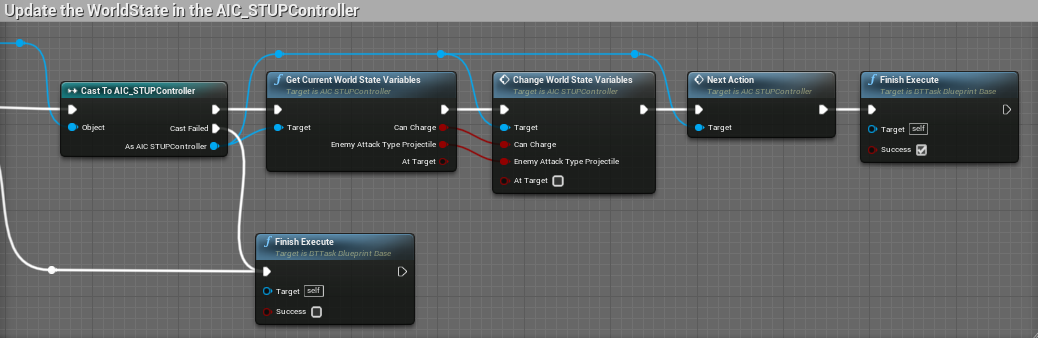

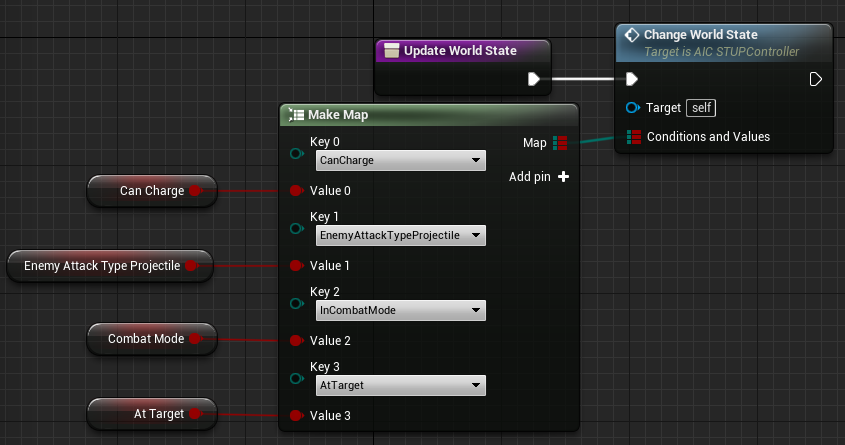

GOAP needs a world state to function since we had chosen to go with a Boolean based GOAP which means that we had to add it to the world state somehow, this is either done after an action finishes or in this case at the start of the execution of the AI

Next up we need to score the goals. This is done in 3 ways, using the score, multiplier, and rank method. The score is the direct influence of external factors, such as distance and fear. The multiplier is a bit more extreme and normally kept between 0 and 2, for example in the case of the mentioned getFood function the score can be influenced by distance, how busy it is with other tasks, and how long it has gone without food. While the multiplier will be how close it too starving to death, with a multiplier of 2, it will need to immediately get food. The Rank is slightly different from the other 2 and is a widely debated topic on which the best range of numbers to use. It is a way for the developers to still maintain a sense of control over the AI, for example, even if it is extremely tired, falling asleep during combat should never happen, which means that the rank of Sleeping is lower than the rank of any combat-related task.

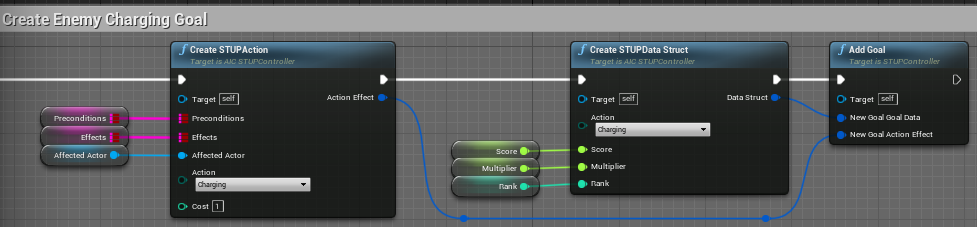

Once we have the score, we create the goals, this is done for each action that can be done, on each actor it can be done on. It takes an action, and a data struct, which together holds all the variables needed for the STUP algorithm to work.

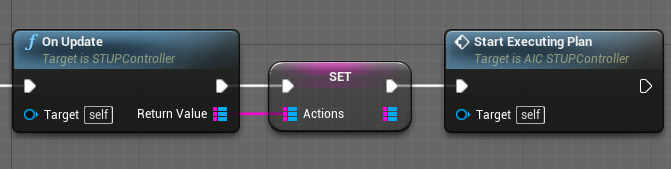

After all, goals have been created we move on to the OnUpdate function, which collects all goals and actions. Adds them to arrays and feeds them to the FSTUPReasoner, which then calls the other algorithms in the order it needs to.

Then we move to the first utility step, it is quite simple, it moves over all the goals, see if any of them have a score or multiplier lower than 0, and then remove that goal.

void FUtilityReasoner::UtilityStep1(TArray<FGoalStruct*>& a_Goals)

{

//Go backwards through the array

for(int currentGoal = a_Goals.Num() - 1; currentGoal >= 0; currentGoal--)

{

//If the Utility(score * multiplier) is lower or equal to 0 removeit

if(a_Goals[currentGoal]->m_GoalData.m_Multiplier <= 0.0f || a_Goals[currentGoal]->m_GoalData.m_Score <= 0.0f)

{

delete a_Goals[currentGoal];

a_Goals[currentGoal] = nullptr;

a_Goals.RemoveAt(currentGoal);

}

}

}After that, we will eliminate all goals that don’t have a rank higher or equal to the highest rank goal that we can find

void FUtilityReasoner::UtilityStep2(TArray<FGoalStruct*>& a_Goals)

{

//Go through array to determine the highest rank

int highestRank = 0;

for (FGoalStruct* currentGoal : a_Goals)

{

if (currentGoal->m_GoalData.m_Rank > highestRank)

{

highestRank = currentGoal->m_GoalData.m_Rank;

}

}

//Go backwards through the array and remove the ones that aren't the highest rank

for (int currentGoal = a_Goals.Num() - 1; currentGoal >= 0; currentGoal--)

{

if (a_Goals[currentGoal]->m_GoalData.m_Rank < highestRank)

{

delete a_Goals[currentGoal];

a_Goals[currentGoal] = nullptr;

a_Goals.RemoveAt(currentGoal);

}

}

}Then we move onto GOAP which is a slightly longer and more complicated process, it involves creating a possible plan from the current world-state to the end, by checking all possible actions from the current possible point, and then taking the cheapest action forward. Once we have then reached the goal, it uses A* to find the cheapest path to the goal. Which gets passed on.

// Check each FGOAPNode REACHABLE from Current -- in other words, where can we go from here?

for (const auto& PotentialAction : a_ActionEffects)

{

if (PotentialAction->OperableOn(Current->m_WS))

{

FWorldState Outcome = PotentialAction->ActOn(Current->m_WS);

// Skip if already closed

if (MemberOfClosed(Outcome))

{

continue;

}

// Look for a FGOAPNode with this FWorldState on the open list.

auto PotentialOutcomeNode = MemberOfOpen(Outcome);

if (!PotentialOutcomeNode) // not a member of open list

{

// Make a new node, with Current as its parent, recording G & H

FGOAPNode* Found = new FGOAPNode(Outcome, Current->m_G + PotentialAction->m_Cost,

CalculateHeuristic(Outcome, *a_Goal), Current->m_Id, PotentialAction->m_Action);

// Add it to the open list (maintaining sort-order therein)

AddToOpenList(MoveTemp(Found));

}

else

{ // already a member of the open list

// check if the Current G is better than the recorded G

if (Current->m_G + PotentialAction->m_Cost < (*PotentialOutcomeNode)->m_G)

{

(*PotentialOutcomeNode)->m_ParentId = Current->m_Id; // make Current its parent

(*PotentialOutcomeNode)->m_G = Current->m_G + PotentialAction->m_Cost; // recalculate G & H

(*PotentialOutcomeNode)->m_H = CalculateHeuristic(Outcome, *a_Goal);

(*PotentialOutcomeNode)->m_Action = PotentialAction->m_Action;

// resort open list to account for the new F

// sorting likely invalidates the p_outcome_node iterator, but we don't need it anymore

Sorting(m_Open);

}

}

}

} // Ending the adding of new nodes.// Is our Current state the goal state? If so, we've found a path, yay.

if (Current->m_WS.MeetsGoal(*a_Goal))

{

TArray<uint32> ThePlan;

TArray<AActor*> ListOfActionTargets;

uint32 TotalCost = 0;

do

{

ThePlan.Push(Current->m_Action);

TotalCost += Current->m_G;

auto Itr = m_Open.FindByPredicate([&](const FGOAPNode* n) { return n->m_Id == Current->m_ParentId; });

if (Itr == nullptr)

{

Itr = m_Closed.FindByPredicate([&](const FGOAPNode* n) { return n->m_Id == Current->m_ParentId; });

}

auto ActionEffect = a_ActionEffects.FindByPredicate([&](FActionEffect* n) { return n->m_Action == Current->m_Action; });

ListOfActionTargets.Push((*ActionEffect)->m_Target);

Current = *Itr;

} while (Current->m_ParentId != 0);

a_Goal->m_GoalData.m_Plan = ThePlan;

a_Goal->m_GoalData.m_Cost = TotalCost + a_Goal->m_ActionEffect.m_Cost;

a_Goal->ActorsForActions = ListOfActionTargets;

return;

}

We then move on to step 3 of Utility, where there is a chance that we eliminate a couple of actions, or nothing happens if we try to eliminate too many actions, the cutoff ratio should be data-driven, but our designers asked us if we could let them set that ratio.

void FUtilityReasoner::UtilityStep3(TArray<FGoalStruct*>& a_Goals)

{

//Get the highest utility per cost

float highestUtilityPerCost = 0.0f;

for(FGoalStruct* currentGoal : a_Goals)

{

float utility = currentGoal->m_GoalData.m_Score * currentGoal->m_GoalData.m_Multiplier;

float utilityPerCost = utility / currentGoal->m_GoalData.m_Cost;

if (utilityPerCost > highestUtilityPerCost)

{

highestUtilityPerCost = utilityPerCost;

}

}

//remove all the goals with a to low percentage of the highest utility per cost

TArray<uint16> CouldBeRemoved;

float TotalCutOffRatio = 0.0f;

for(int currentGoal = a_Goals.Num() - 1; currentGoal >= 0; currentGoal--)

{

float utility = a_Goals[currentGoal]->m_GoalData.m_Score * a_Goals[currentGoal]->m_GoalData.m_Multiplier;

float utilityPerCost = utility / a_Goals[currentGoal]->m_GoalData.m_Cost;

if(highestUtilityPerCost * m_CutOffRatio > utilityPerCost)

{

CouldBeRemoved.Push(currentGoal);

TotalCutOffRatio += utilityPerCost;

}

}

//If the total percentage of the utilityPerCost of tasks removed is greater than the cutOffRatio, those tasks should be kept.

if(highestUtilityPerCost * m_CutOffRatio > TotalCutOffRatio)

{

for(size_t toRemove = 0; toRemove < CouldBeRemoved.Num(); toRemove++)

{

delete a_Goals[CouldBeRemoved[toRemove]];

a_Goals[CouldBeRemoved[toRemove]] = nullptr;

a_Goals.RemoveAt(CouldBeRemoved[toRemove]);

}

}

}Once that is done we take a weight-based random to determine which plan should be executed.

void FUtilityReasoner::UtilityStep4(TArray<FGoalStruct*>& a_Goals)

{

//Get the total utility per cost

float totalUtilityPerCost = 0.0f;

for(FGoalStruct* currentGoal : a_Goals)

{

float utility = currentGoal->m_GoalData.m_Score * currentGoal->m_GoalData.m_Multiplier;

totalUtilityPerCost += utility / currentGoal->m_GoalData.m_Cost;

}

//Get a random number and use it to decide on the goal and remove the rest

//Might want to change randomNumber to a percentage based random number

float randomNumber = FMath::FRandRange(0.0f, totalUtilityPerCost);

float currentCombinedUtilityPerCost = 0.0f;

int goalToGoFor = 0;

for(int currentGoal = 0; currentGoal != a_Goals.Num(); currentGoal++)

{

float utility = a_Goals[currentGoal]->m_GoalData.m_Score * a_Goals[currentGoal]->m_GoalData.m_Multiplier;

currentCombinedUtilityPerCost += utility / a_Goals[currentGoal]->m_GoalData.m_Cost;

if(currentCombinedUtilityPerCost > randomNumber)

{

goalToGoFor = currentGoal;

break;

}

}

//Remove all the goals besides the one to go for

for (int currentGoal = a_Goals.Num() - 1; currentGoal >= 0; currentGoal--)

{

if (currentGoal != goalToGoFor)

{

delete a_Goals[currentGoal];

a_Goals[currentGoal] = nullptr;

a_Goals.RemoveAt(currentGoal);

}

}

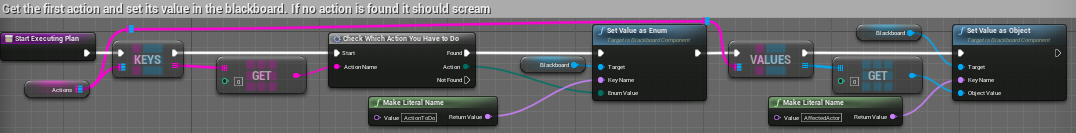

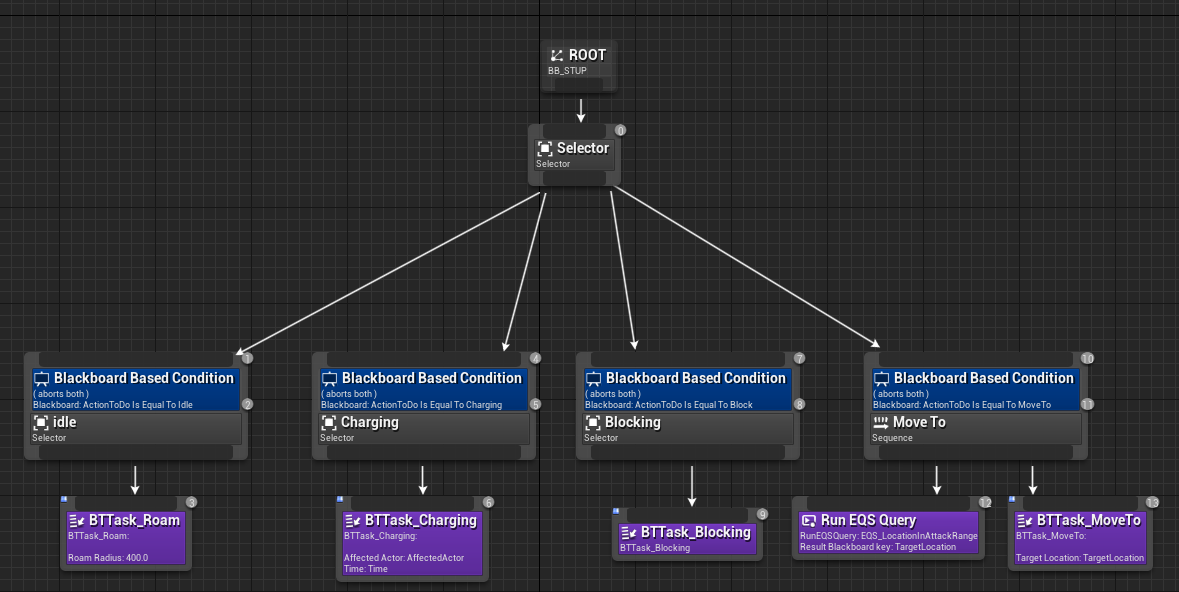

}Once that is done, the action that this goal was supposed to go for is added onto the plan, after which the plan is output as a map of which the keys are strings and the values are actors. Then we use the blackboard and behavior tree to push everything to the correct action.

We then call the update world-state again, which changes the world-state according to the given effects of the action. And then we call Next Action, which will set the blackboard variables correctly or call for a new plan if either the plan is empty or a certain amount of time has passed. After which we call the behavior tree again and follow up with a new task.