Bewildered AI

We are currently working on a game called Bewildered. It is a third-person exploration platformer in which your goal is to restore an island to its former glory with the help of a monster you befriend along the way.

Link to the game: https://store.steampowered.com/app/1768540/Bewildered/

However, I mainly wanted to post this to talk about its AI. It uses a form of AI that I had only seen once before and that, as far as I know, hasn’t been implemented in any triple-A game.

The AI algorithm I’m talking about is Short Term Utility Planning, or STUP for short. It combines two popular forms of AI into one: Goal Oriented Action Planning (GOAP) and Utility Theory-based AI, which I will shorten to Utility.

Its benefit over GOAP is that it lets us score plans based on needs and wants, and therefore simulate a more human way of picking a plan. It also makes up for what Utility lacks by adding a planning element: Utility is really good when you want to make human-looking decisions, but it absolutely sucks at long-term planning.

I originally got this idea from a video by the YouTube channel Thoughtquake, in which he discussed the AI he was going to use in his own game. While his approach is a decent one, I disagree with some of his points, so I will discuss how our AI works and where it differs from that video.

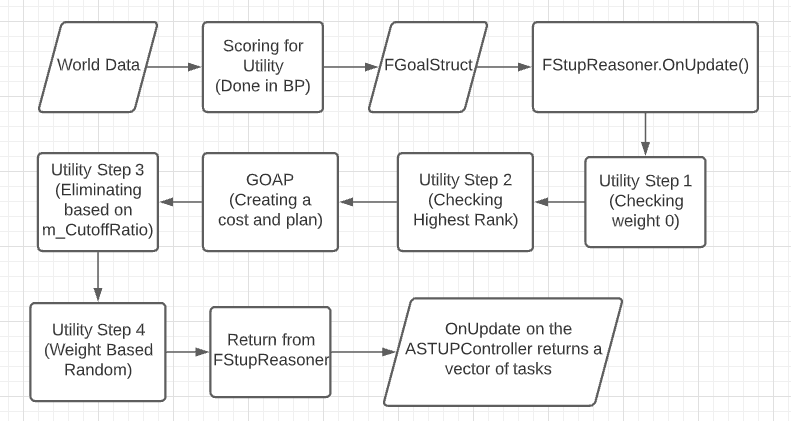

I want to start off with a flowchart of the path the data takes through the algorithm, after which I will explain the steps using that flowchart. For those interested in a more technical view of the algorithm, with code snippets and detailed explanations of the AI, you can find that here: http://www.mikevandermaas.nl/stup-ai-in-unreal-engine-4/

We start with world data, which the GOAP part of STUP uses to determine which actions we can and can’t take. Next is the scoring for Utility, where we score how much we want to execute a certain plan. For example, if the AI is hungry, the getFood and eat goals get a higher score.

Together, these make up an FGoalStruct. (The F, A, and m_ prefixes come from the code conventions of our group and of Unreal Engine.) The FGoalStruct is then passed from Blueprints into code.
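As a rough illustration of that goal data (not the actual project code), the sketch below scores goals from a couple of needs. `GoalSketch`, `Needs`, `ScoreGoals`, the linear scoring rule, and the 0 to 10 score range are all assumptions made for this example; the real FGoalStruct is an Unreal struct whose fields are not shown in this post.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical stand-in for the goal data; not the real FGoalStruct.
struct GoalSketch {
    std::string name;   // e.g. "getFood", "eat", "sleep"
    float score = 0.0f; // utility: how much the AI wants this goal
    int rank = 0;       // priority category, used in a later step
};

// Hypothetical needs, each expected in the range [0, 1].
struct Needs {
    float hunger = 0.0f;
    float tiredness = 0.0f;
};

// Assumed scoring rule: a goal's score rises linearly with its need.
std::vector<GoalSketch> ScoreGoals(const Needs& needs) {
    const float hunger = std::clamp(needs.hunger, 0.0f, 1.0f);
    const float tired = std::clamp(needs.tiredness, 0.0f, 1.0f);
    return {
        {"getFood", hunger * 10.0f, 1},
        {"eat", hunger * 10.0f, 1},
        {"sleep", tired * 10.0f, 1},
    };
}
```

With a hungry but well-rested AI, getFood and eat end up with a higher score than sleep, which matches the example above.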

Next, we call the OnUpdate function, which starts creating a new plan by passing the data on to the separate algorithms. This is where we differ from Thoughtquake’s video: he starts by creating a plan for each action, whereas we first eliminate certain plans based on utility, which reduces the overall impact on performance.

Concretely, we use the first step of Utility to weed out plans that are unwanted or physically impossible, by checking whether the score we gave them earlier is equal to or lower than 0.
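A minimal sketch of that first filter could look like this. `ScoredGoal` and `RemoveUnwanted` are hypothetical names used only for illustration.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical goal record: a name plus its utility score.
struct ScoredGoal {
    std::string name;
    float score;
};

// Drops every goal whose score is 0 or lower, so no plan is built for it.
std::vector<ScoredGoal> RemoveUnwanted(std::vector<ScoredGoal> goals) {
    goals.erase(std::remove_if(goals.begin(), goals.end(),
                               [](const ScoredGoal& g) { return g.score <= 0.0f; }),
                goals.end());
    return goals;
}
```

Because this runs before any planning, the planner never spends time on goals that could not be selected anyway.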

After that we check for rank, which is how Utility prioritizes certain actions over others. For example, in combat you never want to suddenly go to sleep, which is why the sleep goal can get a rank of 1 while all combat-related actions get a rank of 10.
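The rank check can be sketched as follows, assuming that only the goals in the highest rank currently present survive. That is how rank is commonly handled in utility-based AI, but the post does not spell out the exact rule. `RankedGoal` and `KeepHighestRank` are hypothetical names.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical goal record with a utility score and a rank category.
struct RankedGoal {
    std::string name;
    float score;
    int rank;
};

// Assumption: keep only the goals that share the highest rank present.
std::vector<RankedGoal> KeepHighestRank(const std::vector<RankedGoal>& goals) {
    if (goals.empty()) return {};
    int best = goals.front().rank;
    for (const RankedGoal& g : goals) best = std::max(best, g.rank);

    std::vector<RankedGoal> kept;
    for (const RankedGoal& g : goals) {
        if (g.rank == best) kept.push_back(g);
    }
    return kept;
}
```

With a sleep goal at rank 1 and two combat goals at rank 10, only the combat goals remain, even if sleep has the highest score.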

Then we use GOAP: we create a plan for each action that the AI might want to take and give each plan a cost. This means we might end up with 5 plans, some of which are very cheap to carry out while others are more expensive.
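The planner itself is not shown in this post, so the following is only one common way to build a GOAP-style planner: a uniform-cost search over a world state made of boolean facts. All names (`WorldState`, `Action`, `Plan`, `FindCheapestPlan`) and the bitmask representation are assumptions made for this sketch.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <map>
#include <optional>
#include <queue>
#include <string>
#include <utility>
#include <vector>

using WorldState = std::uint32_t; // one bit per boolean fact about the world

struct Action {
    std::string name;
    WorldState preconditions; // facts that must be true to run the action
    WorldState adds;          // facts the action makes true
    WorldState removes;       // facts the action makes false
    float cost;               // assumed to be non-negative
};

struct Plan {
    std::vector<std::string> actions; // in execution order
    float cost = 0.0f;
};

// Finds the cheapest action sequence from `start` to a state that
// contains every fact in `goal`, or nothing if no such sequence exists.
std::optional<Plan> FindCheapestPlan(WorldState start, WorldState goal,
                                     const std::vector<Action>& actions) {
    struct Node { float cost; WorldState state; };
    auto costlier = [](const Node& a, const Node& b) { return a.cost > b.cost; };
    std::priority_queue<Node, std::vector<Node>, decltype(costlier)> open(costlier);

    std::map<WorldState, float> best; // cheapest known cost per state
    // For each state: the state it was reached from and the action used.
    std::map<WorldState, std::pair<WorldState, std::size_t>> cameFrom;

    best[start] = 0.0f;
    open.push(Node{0.0f, start});

    while (!open.empty()) {
        const Node node = open.top();
        open.pop();
        if (node.cost > best[node.state]) continue; // outdated queue entry

        if ((node.state & goal) == goal) {
            // Walk back to the start to recover the action sequence.
            Plan plan;
            plan.cost = node.cost;
            WorldState s = node.state;
            for (auto it = cameFrom.find(s); it != cameFrom.end(); it = cameFrom.find(s)) {
                plan.actions.push_back(actions[it->second.second].name);
                s = it->second.first;
            }
            std::reverse(plan.actions.begin(), plan.actions.end());
            return plan;
        }

        for (std::size_t i = 0; i < actions.size(); ++i) {
            const Action& a = actions[i];
            if ((node.state & a.preconditions) != a.preconditions) continue;
            const WorldState next = (node.state & ~a.removes) | a.adds;
            const float cost = node.cost + a.cost;
            const auto found = best.find(next);
            if (found == best.end() || cost < found->second) {
                best[next] = cost;
                cameFrom[next] = std::make_pair(node.state, i);
                open.push(Node{cost, next});
            }
        }
    }
    return std::nullopt; // the goal cannot be reached
}
```

Running this once per surviving goal would give one plan and one cost per goal, which is the input the later selection steps need.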

We then use the third step of Utility, which again differs somewhat from the video. Thoughtquake aborts plans as soon as they can no longer come close to the current best, but one of the most important parts of Utility Theory is that it allows for some degree of randomness.

The paper “Design Patterns for the Configuration of Utility-Based AI” explains the third step nicely, including why it needs to exist and why we should only eliminate plans that should never be picked:

“Third, we eliminate options whose weight is significantly less than that of the best remaining option. The intent here is to eliminate options that would look stupid if selected.

The exact cutoff ratio to use in this step is data-driven, and varies depending on the decision being made. For example, if we’re choosing between two weapons, one with a weight of 5 and the other with a weight of 1, then we should probably not select the second weapon (doing so will look stupid). On the other hand, sometimes we have a large number of options that are very similar, whose collective weight is what’s important. For example, if we’re configuring a target selection AI for a sniper shooting at a platoon of Marines, we might want the probability of shooting the platoon leader to be roughly twice that of shooting one of the other Marines. Since there are roughly 40 enlisted Marines in a platoon, the probability of shooting each of them would only be about 1.25% that of shooting the platoon leader – far less than the 20% cutoff ratio given above. If we allow this step to eliminate those low weight options then the probability of shooting the platoon leader becomes 100%, which was not the intent.”

“Design Patterns for the Configuration of Utility-Based AI”
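The cutoff step described in the quote can be sketched like this. `WeightedPlan` and `RemoveWeakPlans` are hypothetical names, and the cutoff ratio is left as a parameter because, as the quote says, the right value depends on the decision being made.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical plan record: a name plus the weight used for selection.
struct WeightedPlan {
    std::string name;
    float weight;
};

// Keeps only the plans whose weight is at least `cutoffRatio` times the
// best weight. Assumes weights are non-negative; a ratio of 0 keeps all.
std::vector<WeightedPlan> RemoveWeakPlans(const std::vector<WeightedPlan>& plans,
                                          float cutoffRatio) {
    float best = 0.0f;
    for (const WeightedPlan& p : plans) best = std::max(best, p.weight);

    std::vector<WeightedPlan> kept;
    for (const WeightedPlan& p : plans) {
        if (p.weight >= best * cutoffRatio) kept.push_back(p);
    }
    return kept;
}
```

With weights of 10, 4, and 1 and a ratio of 0.2, the plan with weight 1 is dropped while the other two stay in the running. For a case like the sniper example, a ratio of 0 would keep all of the low-weight options.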

Then, as the last step, we use a weight-based random selection to determine the plan we are going to use. The weight is the Score per Cost, which means that something we really want but that is extremely expensive can be valued the same as something we want less but that is a lot cheaper to do.
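A small sketch of that final selection, assuming the weight is simply score divided by cost. `CandidatePlan`, `ScorePerCost`, and `PickPlan` are hypothetical names; `std::discrete_distribution` from the standard library does the weighted draw.

```cpp
#include <cstddef>
#include <random>
#include <string>
#include <vector>

// Hypothetical plan record: the utility score and the planner's cost.
struct CandidatePlan {
    std::string name;
    float score;
    float cost; // assumed to be greater than 0
};

// Assumed weighting: how much we want the plan per unit of cost.
float ScorePerCost(const CandidatePlan& plan) {
    return plan.score / plan.cost;
}

// Picks the index of one plan at random, with a probability proportional
// to its score per cost. Assumes `plans` is not empty.
std::size_t PickPlan(const std::vector<CandidatePlan>& plans, std::mt19937& rng) {
    std::vector<double> weights;
    weights.reserve(plans.size());
    for (const CandidatePlan& p : plans) weights.push_back(ScorePerCost(p));

    std::discrete_distribution<std::size_t> pick(weights.begin(), weights.end());
    return pick(rng);
}
```

For example, a plan with a score of 10 and a cost of 5 gets the same weight as a plan with a score of 4 and a cost of 2, so both are equally likely to be picked.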

Afterward, the plan, which by now is a single vector of actions to take, is output back to Blueprints, where it can be picked up and executed.